How to Automate Web Scraping with AI

Adrian Krebs, Co-Founder & CEO of Kadoa

Web Scraping: A Solved Problem

Web scraping has been around for as long as the internet itself and is considered a solved problem. Programmatically extracting data from websites is a common practice across various industries and projects. Many business decisions rely on web data, such as price monitoring, market research, or lead generation.

Traditionally, web scraping involves the following steps:

- Programmatically load the website and fetch the HTML markup.

- Use CSS selectors or XPath to access sections of the markup containing relevant information.

- Do some formatting and clean-up.

- Map the data into a structured format and integrate it into a CSV file or a database.
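As a rough illustration of these four steps, here is a minimal sketch using only the Python standard library. The sample markup, the `product` and `price` class names, and the function names are made up for this example. `xml.etree.ElementTree` only accepts well-formed markup, so a real scraper would normally fetch the page over HTTP and use a lenient HTML parser instead.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Step 1 stand-in: a real scraper would download this markup
# (for example with urllib.request.urlopen). The content is invented.
SAMPLE_HTML = """
<html><body>
  <div class="product"><h2> Widget </h2><span class="price">$19.99</span></div>
  <div class="product"><h2>Gadget</h2><span class="price">$5.00</span></div>
</body></html>
"""


def extract_products(markup: str) -> list[dict]:
    root = ET.fromstring(markup)
    rows = []
    # Step 2: an XPath-style rule that is tied to this exact page layout.
    for node in root.findall(".//div[@class='product']"):
        name = node.findtext("h2", default="")
        price = node.findtext("span[@class='price']", default="")
        # Step 3: formatting and clean-up.
        rows.append({
            "name": name.strip(),
            "price": float(price.strip().lstrip("$")),
        })
    return rows


def to_csv(rows: list[dict]) -> str:
    # Step 4: map the records into a structured format (CSV in memory).
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


products = extract_products(SAMPLE_HTML)
csv_text = to_csv(products)
```

The rule in step 2 is the fragile part: if the site renames the `product` class or moves the price into another element, the function silently returns nothing.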

The advent of single-page applications made web scraping more challenging over time, as it required heavy solutions like Selenium or Puppeteer to render and load dynamic websites with JavaScript.

Writing a rule-based scraper for each individual source has been the way to do web scraping since the beginning. There have been attempts at automated scraping and crawling in the past, but none of them made it beyond the concept or MVP stage, because the sources are too diverse and change too often for rule-based automation to keep up.

The Challenges of Traditional Web Scraping

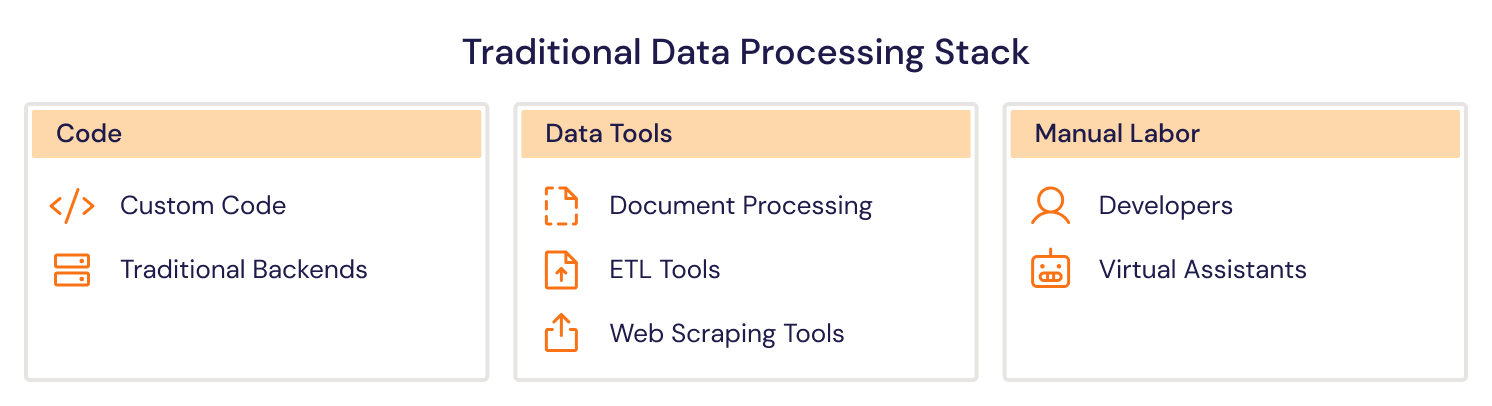

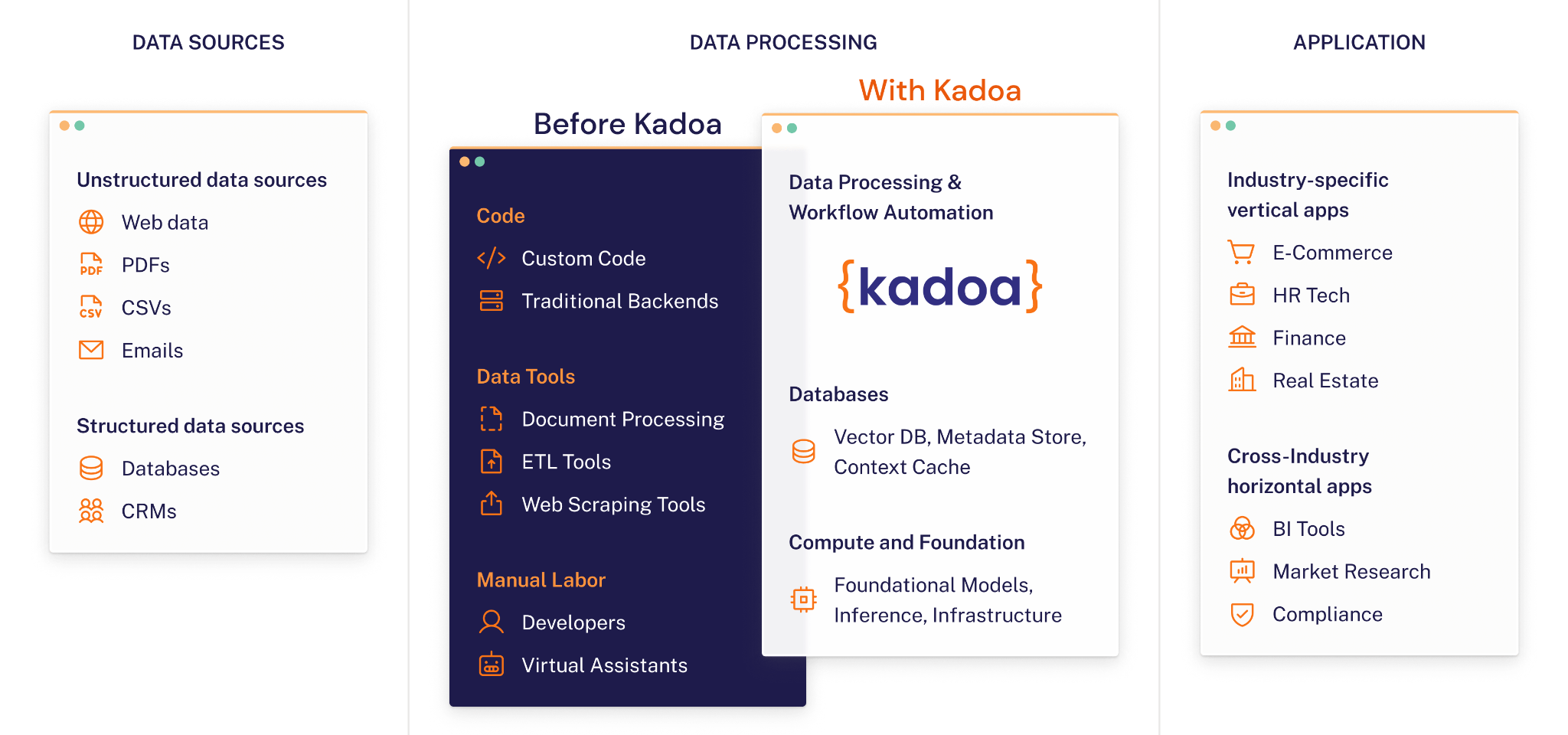

In the past, enterprises relied on a complex daisy chain of software, data systems, and human intervention to extract, transform, and integrate data from websites. In addition, thousands of virtual assistants in low-wage countries do nothing but tedious manual data entry work (see Upwork, for example).

Companies that do web scraping commonly face these universal challenges:

- High Maintenance Costs: Maintenance is the primary cost factor, especially as the number of sources increases, mainly because scrapers need manual intervention whenever websites change.

- Slow Development Cycles: The reliance on developer teams for creating and updating scrapers leads to slow turnaround times.

- Data Inconsistency: Varied sources and the need for constant updates often result in unreliable data.

Having encountered these limitations of rule-based scraping methods in past projects, I began experimenting with the automation of extracting and transforming unstructured HTML when GPT-3 was released.

I believe that manual data processing work is wasted human capital and should be fully automated. It's tedious, it's boring, and it's error-prone.

Would it be possible to automate that kind of boring yet challenging task with AI?

Putting Web Scraping on Autopilot

AI should automate tedious and uncreative work, and web scraping definitely fits this description. We quickly realized that one of the superpowers of LLMs is not generating text or images; it's understanding and transforming unstructured data.

The future stack will involve AI-powered data workflows that automatically extract, process, and transform data into the desired format, regardless of the source.

We've started using LLMs to generate web scrapers and data processing steps on the fly that adapt to website changes. Using an LLM for every data extraction would be expensive and slow, but using LLMs to generate the scraper code and subsequently adapt it to website modifications is highly efficient.
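The cost argument can be made concrete with a small sketch of the "generate once, reuse, regenerate on failure" pattern. Everything here is an assumption for illustration: `ScraperCache`, `fake_generate_rule`, and the regex-based rules are hypothetical, and the generator is a plain function standing in for the LLM call so the example runs offline.

```python
import re
from typing import Callable, Optional

# A "rule" is the generated scraper: it takes HTML and returns a value or None.
Rule = Callable[[str], Optional[str]]


def make_rule(pattern: str) -> Rule:
    compiled = re.compile(pattern)

    def rule(page: str) -> Optional[str]:
        match = compiled.search(page)
        return match.group(1) if match else None

    return rule


def fake_generate_rule(html: str) -> Rule:
    """Hypothetical stand-in for the LLM: picks a pattern that fits the sample page."""
    for pattern in (r'<span class="price">([^<]+)</span>', r"<b data-price>([^<]+)</b>"):
        if re.search(pattern, html):
            return make_rule(pattern)
    return lambda page: None


class ScraperCache:
    """Keeps one generated rule per site and only regenerates when it stops working."""

    def __init__(self, generate_rule: Callable[[str], Rule]):
        self._generate_rule = generate_rule
        self._rules: dict[str, Rule] = {}
        self.generation_calls = 0  # counts the expensive (model) calls

    def extract(self, site: str, html: str) -> Optional[str]:
        rule = self._rules.get(site)
        if rule is not None:
            value = rule(html)
            if value is not None:
                return value  # cheap path: no model call
        # First visit, or the cached rule broke: generate a new rule.
        self.generation_calls += 1
        rule = self._generate_rule(html)
        self._rules[site] = rule
        return rule(html)


cache = ScraperCache(fake_generate_rule)
old_page = '<span class="price">10</span>'
new_page = "<b data-price>12</b>"  # the site changed its markup
results = [
    cache.extract("shop", old_page),
    cache.extract("shop", old_page),
    cache.extract("shop", new_page),
]
```

In this toy run, three extractions trigger only two generation calls: one for the first visit and one after the markup changed.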

We've developed many small AI agents that basically just pick the right strategy for a specific sub-task in our workflows. In our case, an agent is a medium-sized LLM prompt that has a) context and b) a set of functions available to call.

- Website Loading: Automate proxy and browser selection to load sites effectively. Start with the cheapest and simplest way of extracting data, which is fetching the site without any JS or actual browser. If that doesn't work, the agent tries to load the site with a browser and a simple proxy, and so on.

- Navigation: Detect navigation elements and handle actions like pagination or infinite scroll automatically.

- Network Analysis: Identify desired data within network calls.

- Data Transformation: Clean and map the data into the desired format. Small, fine-tuned LLMs handle this task quickly and with high reliability.

- Validation: Hallucination checks and verification that the data is actually on the website and in the right format (this is mostly traditional code, though).
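Two of the steps above lend themselves to a short sketch: the cheapest-first loading escalation and a simple check that extracted values really appear on the page. The loader functions below are hypothetical stubs (no network access), and `load_with_escalation` and `ungrounded_fields` are names invented for this example, not part of any library.

```python
from typing import Callable, Optional

# Each loader returns the page HTML, or None when it could not get usable content.
Loader = Callable[[str], Optional[str]]


def load_with_escalation(url: str, loaders: list[tuple[str, Loader]]) -> tuple[str, str]:
    """Try loaders from cheapest to most expensive; return (strategy name, html)."""
    for name, loader in loaders:
        try:
            html = loader(url)
        except Exception:
            html = None  # treat an error like an empty result and escalate
        if html:
            return name, html
    raise RuntimeError(f"all loading strategies failed for {url}")


def ungrounded_fields(record: dict[str, str], html: str) -> list[str]:
    """Return the fields whose values do not literally appear in the page."""
    page = " ".join(html.split()).lower()  # collapse whitespace, ignore case
    return [
        field
        for field, value in record.items()
        if " ".join(value.split()).lower() not in page
    ]


# Hypothetical stubs so the sketch runs without network access.
def plain_http(url: str) -> Optional[str]:
    return None  # pretend the page is empty without JavaScript


def headless_browser(url: str) -> Optional[str]:
    return "<h1>Acme  Widget</h1><p>Price: 19.99</p>"


def browser_with_proxy(url: str) -> Optional[str]:
    return "<h1>never reached in this example</h1>"


strategy, html = load_with_escalation(
    "https://example.com/item",
    [
        ("plain_http", plain_http),
        ("headless_browser", headless_browser),
        ("browser_with_proxy", browser_with_proxy),
    ],
)
missing = ungrounded_fields(
    {"name": "Acme Widget", "price": "19.99", "sku": "X-1"}, html
)
```

A literal substring check like this is deliberately strict. It flags any value the model reformatted (for example a converted date), so a production check would likely need per-field normalization.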

Automatically Adapting to Website Changes

When a website changes its structure or design, most existing rule-based solutions break. We had to come up with a process that enables maintenance-free web scraping at scale:

- Detect changes in a website's structure and determine if the extracted data is impacted.

- Automatically regenerate the necessary code to adapt to these changes.

- Compare and validate the new data against the previous dataset, notifying users of any schema modifications.
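One possible way to approach the first and last steps is sketched below, assuming a structural fingerprint built from tag names and class attributes, plus a field-level comparison of two datasets. Both `structure_fingerprint` and `schema_diff` are hypothetical helpers written for this example. A changed fingerprint is only a first signal, since it does not by itself show whether the extracted data is affected.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Records each start tag with its class attribute, ignoring text content."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: list[str] = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{classes}")


def structure_fingerprint(html: str) -> str:
    """Hash of the page skeleton: stable when only the text changes."""
    collector = _TagCollector()
    collector.feed(html)
    collector.close()
    return hashlib.sha256("|".join(collector.tags).encode()).hexdigest()


def schema_diff(previous: list[dict], current: list[dict]) -> dict[str, set]:
    """Compare the field names of two datasets."""
    old_fields = set().union(*(row.keys() for row in previous))
    new_fields = set().union(*(row.keys() for row in current))
    return {"added": new_fields - old_fields, "removed": old_fields - new_fields}


page_v1 = '<div class="item"><span class="price">10</span></div>'
page_v1_new_price = '<div class="item"><span class="price">12</span></div>'
page_v2 = '<li class="item"><b class="cost">12</b></li>'

same_structure = structure_fingerprint(page_v1) == structure_fingerprint(page_v1_new_price)
changed_structure = structure_fingerprint(page_v1) != structure_fingerprint(page_v2)
diff = schema_diff([{"name": "a", "price": 1}], [{"name": "a", "cost": 1}])
```

Here a price update leaves the fingerprint untouched, while the redesigned markup changes it, and the schema comparison reports `price` as removed and `cost` as added, which is the kind of modification users would be notified about.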

There are numerous other edge cases to consider, such as website unavailability, that we have to deal with in order to achieve maintenance-free scraping.

Lessons Learned from Making it Production-Ready

We quickly realized that doing this for a few data sources with low complexity is one thing; doing it for thousands of websites in a reliable, scalable, and cost-efficient way is a whole different beast.

The integration of tightly constrained agents with traditional engineering methods effectively solved this issue for us.

Other important lessons we learned:

Self-Hosting LLMs: The first major insight was the advantage of self-hosting open-source LLMs for small and clearly defined data transformation tasks. This allowed us to reduce costs significantly, increase performance, and avoid limitations of third-party APIs.

Evaluation Data: We quickly realized that we need a broad set of training and evaluation data to test our models against a variety of historic snapshots of websites. The web is so diverse that we need test cases across industries and use cases to make our AI generalize well.
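A minimal sketch of what such an evaluation could look like, assuming each historic snapshot is stored as saved HTML together with the record a correct extraction should produce. The `field_accuracy` metric, the `toy_extract` function, and the two snapshots are invented for illustration.

```python
import re
from typing import Callable

# A snapshot pairs saved HTML with the record we expect to extract from it.
Snapshot = tuple[str, dict]


def field_accuracy(extract: Callable[[str], dict], snapshots: list[Snapshot]) -> float:
    """Share of expected fields that the extractor reproduced exactly."""
    correct = total = 0
    for html, expected in snapshots:
        actual = extract(html)
        for field, value in expected.items():
            total += 1
            correct += actual.get(field) == value
    return correct / total if total else 0.0


def toy_extract(html: str) -> dict:
    """Hypothetical extractor under test; it only knows one price layout."""
    title = re.search(r"<h1>(.*?)</h1>", html)
    price = re.search(r'class="price">(.*?)<', html)
    return {
        "title": title.group(1) if title else None,
        "price": price.group(1) if price else None,
    }


snapshots = [
    ('<h1>Widget</h1><span class="price">10</span>', {"title": "Widget", "price": "10"}),
    ('<h1>Gadget</h1><b class="cost">12</b>', {"title": "Gadget", "price": "12"}),
]
score = field_accuracy(toy_extract, snapshots)
```

The second snapshot uses a different price layout, so the toy extractor misses one of four fields. Tracking a score like this per industry or site type shows where the approach fails to generalize.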

Structured Output Stability: Token limits and JSON output stability were a challenge at the beginning. Thanks to the fast advances in AI and improvements in our pre- and postprocessing, this became less and less of an issue.
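To give an idea of what postprocessing for JSON stability can involve, here is a small sketch that pulls a JSON object out of noisy model output, repairs one common defect (trailing commas), and checks for required fields. The function name `parse_model_json` and the sample output are assumptions for this example; it is not a description of our actual pipeline.

```python
import json
import re


def parse_model_json(raw: str, required: set[str]) -> dict:
    """Extract and validate a JSON object from raw model output."""
    # Models often wrap JSON in prose or code fences, so keep only the
    # span from the first "{" to the last "}".
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    candidate = raw[start:end + 1]
    try:
        data = json.loads(candidate)
    except json.JSONDecodeError:
        # Common repair: drop trailing commas before a closing brace or bracket.
        # This naive regex would also touch such sequences inside string values.
        data = json.loads(re.sub(r",\s*([}\]])", r"\1", candidate))
    missing = required - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return data


raw_output = 'Here is the data:\n{"name": "Widget", "price": 19.99,}\nDone.'
record = parse_model_json(raw_output, required={"name", "price"})
```

If the repaired text still fails to parse, `json.loads` raises `json.JSONDecodeError`, which is a subclass of `ValueError`, so callers can handle both failure modes with a single `except ValueError`.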

The Future Landscape

Dealing with a lot of unstructured and diverse data is a great application for AI, and I believe we'll see many automations in the data processing and RPA space in the near future.

This will have broad implications for businesses:

- Cost reduction

- Efficiency gains

- Better data-driven decision making

In economically challenging times, the demand for automating repetitive and costly tasks has never been higher, with AI serving as the pivotal technology enabler. Hopefully developers and VAs around the world will be able to spend their time on more interesting tasks than dealing with web scrapers.

Adrian is the Co-Founder and CEO of Kadoa. He has a background in software engineering and AI, and is passionate about building tools that make data extraction accessible to everyone.
