Llama 3.2 may have just killed proprietary AI models

Adrian Krebs,Co-Founder & CEO of Kadoa

Adrian Krebs,Co-Founder & CEO of KadoaWhen Llama 3 was released in April 2024, it marked an inflection point where open source models caught up with proprietary models (as noted in the original version of this article).

With the introduction of the new multimodal Llama 3.2 LLMs, open source models are not only matching but are starting to surpass proprietary models.

Here is a comparison with GPT-4o-mini based on Meta's benchmarks:

Metric | GPT-4o-mini | Llama 3.2 90B | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Metric | GPT-4o-mini | Llama 3.2 90B | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Metric | GPT-4o-mini | Llama 3.2 90B | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Metric | GPT-4o-mini | Llama 3.2 90B | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Now, you might wonder why Meta is giving these very powerful models away for free.

Mark Zuckerberg's recent letter provides insight into Meta's strategy and vision for open source AI.

He argues that open source is not just good for developers and Meta, but essential for a positive AI future.

This confirms Meta's intention to disrupt proprietary model players.

Meta vs Proprietary Model Players

Meta's goal from the start was to target OpenAI with a "scorched earth" approach by releasing powerful open models to disrupt the competitive landscape.

Meta can likely outspend any AI lab on compute and talent:

- OpenAI makes an estimated revenue of $2B and is likely unprofitable. Meta generated a revenue of $134B and profits of $39B in 2023.

- Meta's compute resources likely outrank OpenAI by now.

- Open source likely attracts better talent and researchers.

As the landscape shifts, we may see moves from other players.

Microsoft might consider acquiring OpenAI to keep pace. Google has similar budget and talent and is also expanding into the open model space.

The Winners: Developers and AI Startups

The big winners here are developers and AI startups:

-

No more vendor lock-in

-

Instead of just wrapping proprietary API endpoints, developers can now integrate AI deeply into their products in a very cost-effective and performant way

-

Price race to the bottom with near-instant LLM responses at very low prices are on the horizon

It feels like a very exciting time to build a startup as your product automatically becomes better, cheaper, and more scalable with every major AI advancement.

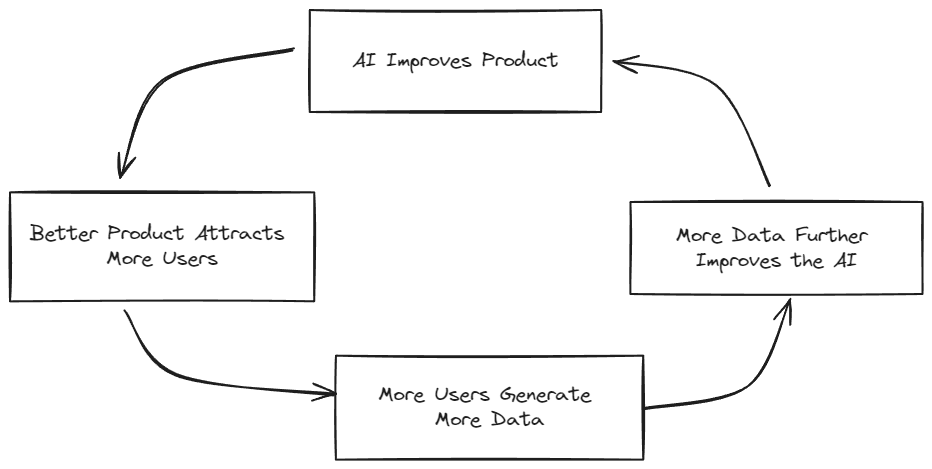

This leads to a powerful flywheel effect for AI startups.

The release of Llama 3.2 marks the democratization of AI, but it's probably too early to declare the death of proprietary models.

For now though, it looks like there is no defensible moat unless a company makes a breakthrough in model training. We're reaching the limits of throwing more data at more GPUs.

These are truly exciting (and overwhelming) times!

Adrian is the Co-Founder and CEO of Kadoa. He has a background in software engineering and AI, and is passionate about building tools that make data extraction accessible to everyone.