The Rise of Unstructured Data ETL

Adrian Krebs,Co-Founder & CEO of Kadoa

Adrian Krebs,Co-Founder & CEO of KadoaWhen Clive Humby coined the term "data is the new oil" in 2006, he meant that data, like oil, isn't useful in its raw state. It needs to be refined, processed, and turned into something useful; its value lies in its potential.

Fast-forward to today, this quote is more true than ever as we're drowning in data, 80% of which is unstructured and largely untapped.

- 80-90% of the world’s data is unstructured in formats like HTML, PDF, CSV, or email

- 90% of it produced over the last two years alone

- The rise of LLM and RAG applications increases the demand for data

However, preparing unstructured data is a major bottleneck. A survey shows that data scientists spend nearly 80% of their time preparing data for analysis. As a result, a lot of the data that companies produce goes unused.

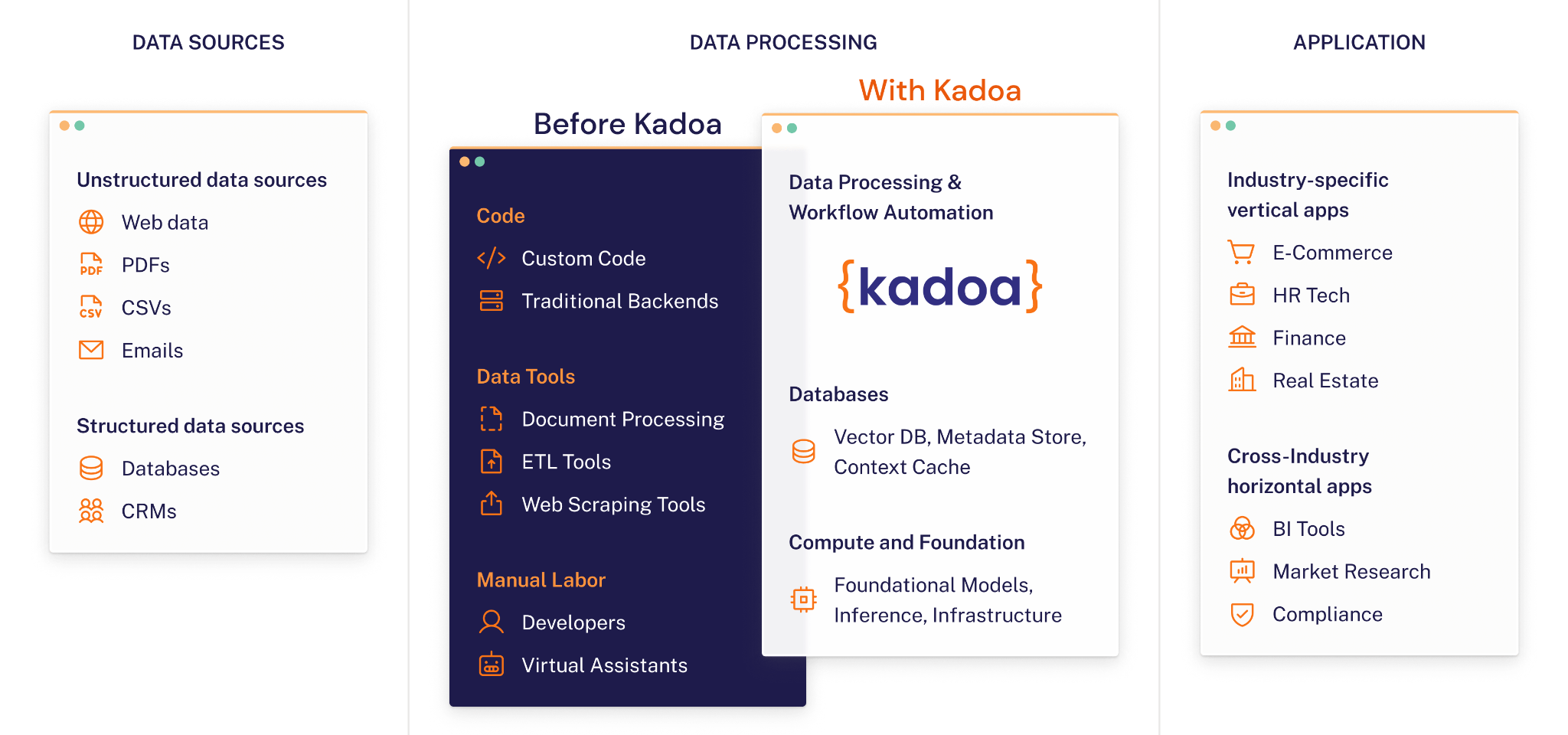

In the past, enterprises relied on a complex daisy chain of software, data systems, and human intervention to extract, transform, and integrate data.

This is where unstructured data ETL comes in - a new paradigm for automating the end-to-end processing of unstructured data at scale.

What is Unstructured Data ETL?

Unstructured data ETL is an innovative approach to automating the end-to-end processing of unstructured data at scale.

It combines the power of AI with traditional data engineering to extract, transform, and load data from diverse sources and formats.

The key components of unstructured data ETL are:

- Data Sources: Unstructured data sources such as websites, PDFs, CSVs, emails, presentations.

- Extract: Automatically extract the data from the sources

- Transform: Automatically clean and format the data into structured formats

- Load: Deliver transformed data into databases or via APIs for downstream applications

- Data Consumers: Data warehouses, BI tools, or business applications

Traditionally, processing unstructured data has been a manual task that requires developers to use various tools, such as web scrapers and document processors, and to write custom code for extracting, transforming, and loading data from each individual source. This approach is time-consuming, labor-intensive, and prone to errors.

Large language models efficiently handle the complexity and variability of unstructured data sources, largely automating this process.

When a website or PDF layout changes, rule-based systems often break. Using AI, we can adapt to these changes and make data pipelines more resilient and maintenance-free.

Here is an overview of how traditional unstructured data processing is now replaced with AI-powered ETL solutions:

Use Cases of Unstructured Data ETL

Automated unstructured data ETL is valuable for automating traditional data processing and becomes increasingly important for preparing data for AI usage.

Traditional use cases:

- Web scraping for price monitoring, lead generation, and market research

- Extracting data from PDFs for financial analysis, legal compliance

- Processing emails and support tickets for sentiment analysis and trend detection

AI data preparation use cases:

- Powering RAG architectures for question-answering over large unstructured datasets

- Efficiently extract data from diverse PDFs with high accuracy

- Automatically collect and prepare web data for LLM usage

The AI data preparation market is expected to experience significant growth in the coming years, and unstructured data ETL will play a crucial role.

Conclusion

Unstructured data ETL is the missing piece in the modern data stack. Data pipelines that took weeks to build, test, and deploy, can now be automated end-to-end in a fraction of the time with the use of tools like unstructured.io or Kadoa.

For the first time, we have turnkey solutions for handling unstructured data in any format and from any source.

Enterprises that apply this new paradigm will be able to fully leverage their data assets (e.g. for LLMs), make better decisions faster, and operate more efficiently.

Data ist (still) the new oil, but now we have the tools to refine it efficiently.